According to recent reports, Korean police are investigating a digital sex crime involving AI (artificial intelligence) and deepfake technology. The crime took place at a middle school in Busan.

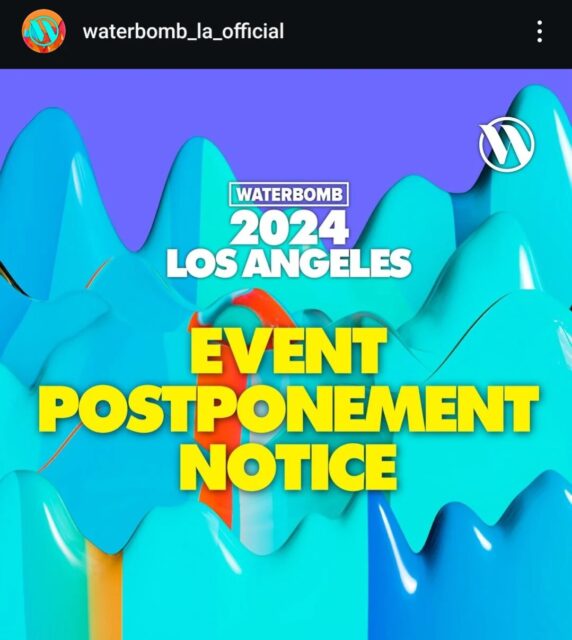

On August 21, the Busan Metropolitan City Office of Education reported that a complaint was filed with the police against four students from a middle school in Haeundae, Busan, for creating and distributing pornographic material. The students allegedly used deepfake technology to commit this crime.

Police are investigating allegations that the students created and shared nearly 80 pornographic pictures of 18 female students from the same school by using AI to superimpose their faces onto images of bodies. The incident happened in June.

The School Violence Response Committee gave the four accused students only 12 to 20 days of suspended attendance and 5 hours of special education. Additionally, one of the students who showed positive initiative was transferred to another class.

The news has infuriated netizens, and many see such incidents as an alarming sign of societal collapse in the country.

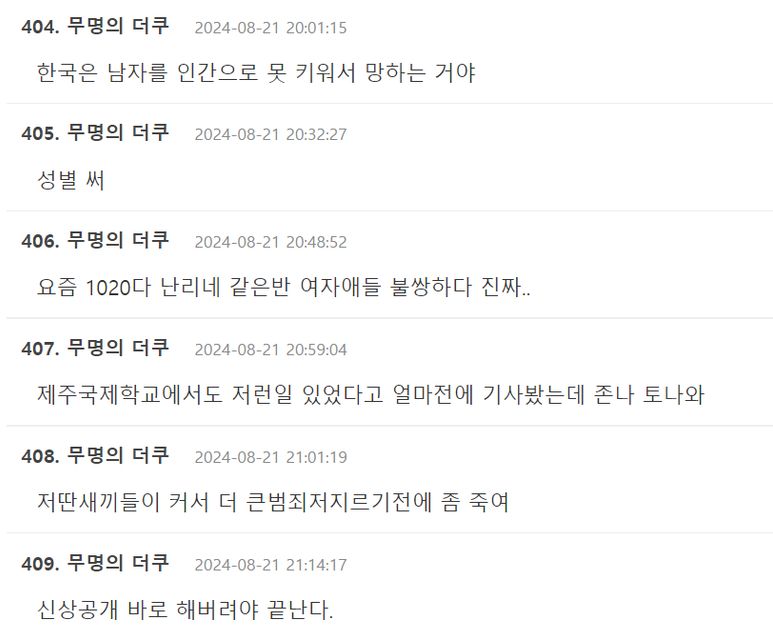

- “Korea is ruined because it couldn’t raise its men to be human beings.”

- “Write down the genders.”

- “These days, people in their teens and 20s are all causing trouble. I feel really bad for the women in those classes.”

- “I read an article a while ago that said something like this happened at Jeju International School, and it p*ssed me off so much.”

- “Kill those b*stards before they grow up and become bigger criminals.”

- “It will be over at once if you reveal the identities.”

Busan has recently seen an uptick in teenagers and young adults using AI technology to commit sex crimes online. A prestigious university in the city also came under fire after the police uncovered a massive sex crime chat room operated by its students, which targeted female alums and students with deepfake images.

Source: Theqoo